China Becomes the Biggest Variable as NVIDIA’s AI Chips Face an “Ambush on All Sides” in 2024

Over the past two days, “Huang Renxun wears Northeastern flowers and dances” has been among the top trending searches.

Nvidia founder and CEO Jensen Huang (Huang Renxun) appeared at the company’s annual year-end gathering in China with neat short white hair, a black T-shirt, and a vest in the Northeastern floral print. Holding a red handkerchief in each hand, he enthusiastically danced Errenzhuan, a folk song-and-dance form from Northeast China. Videos and photos of the dance began circulating widely on social media on the evening of January 20.

While Huang Renxun’s dance was stealing the spotlight, Nvidia’s market value hit a new high. At the close on January 22 (Eastern Time), Nvidia shares stood at US$596.54, giving the company a total market value of US$1.47 trillion. Four days earlier, Meta CEO Mark Zuckerberg said Meta would receive approximately 350,000 H100 GPUs from Nvidia by the end of 2024. Since the start of 2024, Nvidia’s stock price has risen by more than 20%.

However, Nvidia’s position at the peak brings both joys and worries. Its dominance of the AI chip market is hard to shake in the short term. Still, besides its old rival AMD, which is closely pursuing it, tech giants such as Microsoft, Google, Amazon, and Huawei are all trying to claim a share of the pie. The United States keeps tightening its chip export restrictions on China, and as a result the Chinese market has become Nvidia’s biggest variable. Against this background, Nvidia must find a way to cope with losing the Chinese market.

Nvidia CEO wears “Northeastern flowers”

Huang Renxun had not visited mainland China for several years. A visit was rumored for June 2023 but never took place. The trip finally happened in January 2024.

Huang Renxun kept the visit well under wraps, arriving and leaving quietly. The trip only became public on the evening of January 20, when videos of his dance leaked and the media confirmed the visit. By then he had already left.

According to China Business News, Huang’s trip involved no meetings with government officials and no major business announcements. Its main purpose was to “spend a good time” with Chinese employees.

The leaked videos show that, besides dancing in the floral vest, Huang Renxun also gave a speech at the annual gathering and hosted a prize draw.

Guo Junli, research director at IDC Asia Pacific, commented on the trip. He said Huang Renxun had recently visited Nvidia’s offices in Beijing, Shanghai, and Shenzhen and taken part in the China annual gathering. He also met face to face with the local teams to discuss the company’s China market strategy.

Public information shows that Nvidia has nearly 3,000 employees in mainland China across marketing, sales, R&D, and other functions. It has offices in Beijing, Shanghai, Shenzhen, and elsewhere.

“Huang Renxun has always attached great importance to the Chinese market. Despite international geopolitical tensions, he has tried every means to serve it,” IDC Asia Pacific research director Guo Junli said. “This trip not only underscores the importance of the Chinese market in Nvidia’s global strategy, but also strengthens the company’s confidence in its future plans for China.”

On December 6, 2023, Huang Renxun said publicly that the Chinese market accounted for 20% of Nvidia’s total revenue, which shows how crucial China is to the company. “China is one of the world’s largest consumer markets, spanning computers, communications, and other fields, and its volume of consumption and purchasing is enormous. There is no doubt that Nvidia attaches great importance to it,” said Liang Zhenpeng, a senior industrial economics observer.

Come to China to “stabilize people’s hearts”

As is well known, the United States has repeatedly tightened restrictions on AI chip exports to China over the past two years. Nvidia is therefore at risk of losing market share in China.

At the end of 2023, Nvidia unveiled the H200 chip, which is scheduled to become available in the second quarter of 2024. The H200 has been billed as Nvidia’s “most powerful” AI chip ever. It delivers nearly twice the inference speed of the H100 while cutting energy consumption by half.

The H200 is out of reach for Chinese companies, and other Nvidia products face the same fate. In October 2022, a series of US AI chip export restrictions barred Nvidia’s A100 and H100 processors from the Chinese market. After that, Nvidia could serve Chinese customers only through the A800 and H800. These were special versions for China that “reduce performance without reducing price.”

In October 2023, the United States tightened its chip export controls on China further. The A800 and H800 could no longer be sold in China. Even the RTX 4090, a graphics card aimed at “enthusiast-level” PC gamers, was pulled from the Chinese market. The new rules took effect on October 23, 2023.

After the new US restrictions took effect, Chinese technology companies began to describe their impact.

On November 15, 2023, Tencent President Martin Lau (Liu Chiping) spoke on the earnings call of Tencent Holdings (00700.HK). He said Tencent holds one of the largest inventories of Nvidia AI chips, enough to support several more generations of updates to its Hunyuan large model. However, the controls do affect Tencent’s ability to rent out AI chip capacity as a cloud service.

The next evening, Alibaba Group released its results for the second quarter of fiscal 2024 (the third quarter of calendar 2023). The report stated that Alibaba would no longer pursue the full spin-off of its Cloud Intelligence Group. The main reason was that expanded US export controls on advanced computing chips had created uncertainty about the cloud unit’s prospects.

This time, Nvidia’s strategy is to cut computing power “significantly” while cutting the price only “slightly.” It has developed three modified chips that comply with the latest US export rules: the HGX H20, L20 PCIe, and L2 PCIe. Mass production is expected to begin in the first quarter of 2024.

A research report from semiconductor research firm SemiAnalysis describes the H20, which is intended for AI model training. It offers 96 GB of memory, 4 TB/s of memory bandwidth, and 296 teraFLOPS of FP8 performance. In theory, its overall computing power is about 80% lower than that of Nvidia’s H100 GPU.

The price is only slightly lower while overall computing power is down by 80%, so Chinese companies have shown little enthusiasm. Nvidia released its third-quarter 2023 financial report on November 22, 2023. In it, the company said it expected sales in China to drop significantly in the fourth quarter because of the new US regulations.

TrendForce analysts estimate that Nvidia currently supplies 80% of the high-end chips used by Chinese cloud computing companies. That share may fall to 50% to 60% over the next five years. So although Huang Renxun’s visit involved no business meetings, its strategic significance in “stabilizing people’s hearts” stands out.

The new pattern of “competition and cooperation”

Recently, two of Nvidia’s major customers, OpenAI and Meta, have gone in opposite directions. OpenAI is no longer content to buy GPUs from Nvidia and is reaching upstream into chip production. On January 20, Bloomberg reported that OpenAI CEO Sam Altman is raising funds to build semiconductor fabrication plants. These plants would make processors for artificial intelligence (AI) applications.

Meta, another technology giant, has chosen to keep increasing its investment in Nvidia GPUs. On January 18, 2024 (Eastern Time), Meta CEO Zuckerberg announced plans to acquire approximately 350,000 H100 GPUs from Nvidia by the end of 2024. Including its other GPUs, Meta’s total computing power will be close to what 600,000 H100s can provide.

Analysts at Raymond James estimate that the H100 sells for US$25,000 to US$30,000. On platforms such as eBay, it can cost more than US$40,000. Buying 350,000 H100s would therefore require Meta to invest approximately US$9 billion, even at the low end of that price range.
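The roughly US$9 billion figure comes from simple multiplication. The sketch below is a back-of-the-envelope calculation. It assumes every unit is bought at one flat price, although real volume pricing is not public. It multiplies Meta’s planned 350,000 units by each price point quoted above. The price labels and the helper name `total_cost_billions` are illustrative and do not come from the source.

```python
# Rough cost range for Meta's planned H100 purchases.
# Unit count and per-unit prices come from the article; buying every unit at
# a single flat price is an assumption (actual negotiated prices are not public).
UNITS = 350_000

PRICE_POINTS_USD = {
    "Raymond James low": 25_000,
    "Raymond James high": 30_000,
    "eBay (lower bound)": 40_000,
}


def total_cost_billions(units: int, unit_price_usd: int) -> float:
    """Return units * unit price, expressed in billions of US dollars."""
    return units * unit_price_usd / 1e9


if __name__ == "__main__":
    for label, price in PRICE_POINTS_USD.items():
        print(f"{label:>20}: ${total_cost_billions(UNITS, price):.2f}B")
```

At the Raymond James range this gives US$8.75 billion to US$10.5 billion. The article’s figure of about US$9 billion therefore sits at the low end, and eBay-level prices would push the total to about US$14 billion.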

Altman has been worried about GPU shortages and the huge costs they bring. This may be one of the main reasons he is pursuing his own chip factories. At the same time, he is trying to minimize the risk of Nvidia having a chokehold on OpenAI.

OpenAI is not the only Nvidia customer moving upstream into chips. A long list of others, including Google, Amazon, Tesla, Alibaba, Baidu, and Microsoft, have all announced plans to develop their own AI chips.

“Major internet companies have started making their own chips. We believe this will have little impact on overall industry competition in the short term, because Nvidia has all-round advantages in technology, products, ecosystem, and experience,” Guo Junli said. “In the long term, as these companies keep raising their self-sufficiency, it may have some impact on the competitive landscape for AI chips.”

Because of Nvidia’s production capacity constraints, lead times for products including the H100 are long. Omdia data show that Nvidia sold approximately 500,000 A100 and H100 accelerator cards in the third quarter of 2023. The lead time for H100-based servers ranges from 36 to 52 weeks.

This has undoubtedly opened a window for Nvidia’s competitors, chief among them its longtime rival AMD. The two companies nearly split the global GPU market between them, with Nvidia holding about 80% and AMD about 20%.

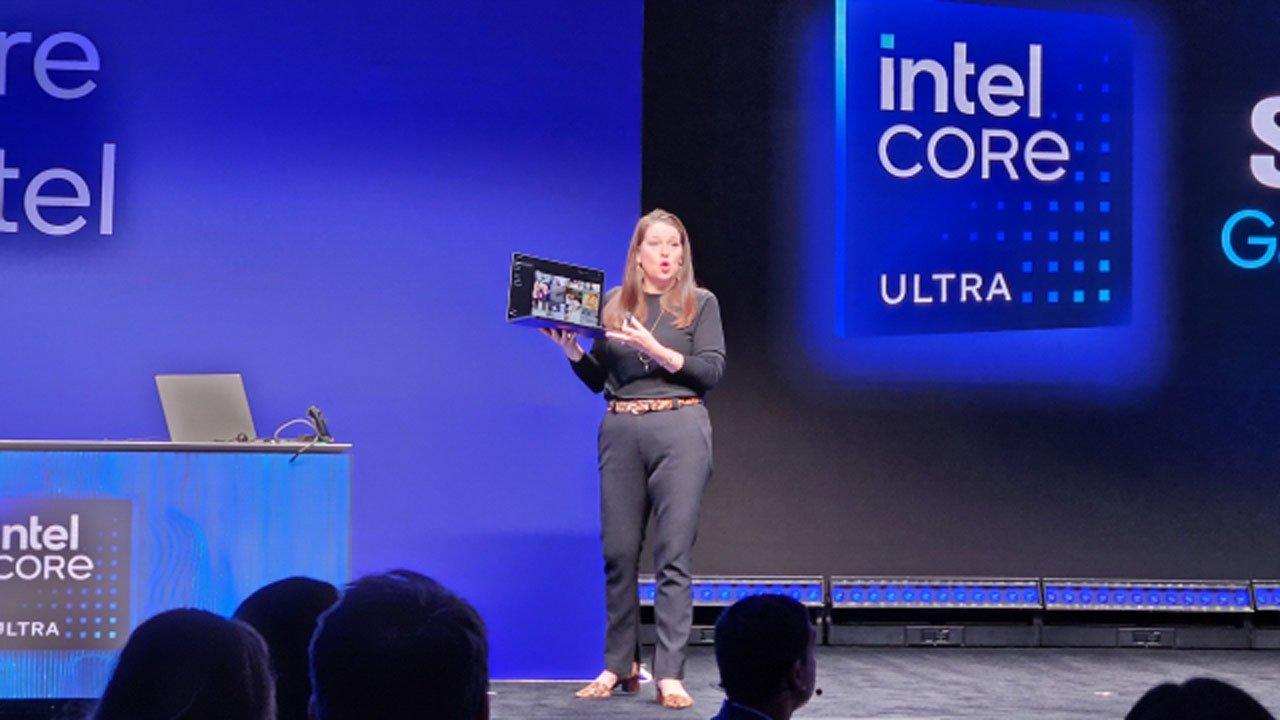

On December 6, 2023, AMD launched the MI300X in a bid to make a comeback, claiming better performance than Nvidia’s H100. However, Nvidia’s H200, announced in November 2023, had already stolen much of the limelight. Intel also announced that it will launch an AI accelerator called Gaudi 3 in 2024, claiming performance that exceeds Nvidia’s H100.

Liang Zhenpeng believes that AI chips cover a wide range of areas and that each company has its own specialties. Nvidia excels at GPUs (graphics processing units), while Intel excels at CPUs (central processing units). The relationship among these companies is therefore not blind competition but “competition and cooperation.” They work together along the upstream and downstream industry chain, and buying from one another is normal.

Who is the biggest variable in the era of artificial intelligence?

ChatGPT, created by OpenAI, leads this wave of generative AI. The three core elements of generative AI are data, algorithms, and computing power, and computing power is the weapon that carried Nvidia to the top. A report released in October 2023 showed that OpenAI mainly uses Nvidia’s A100 and H100 GPUs for its popular ChatGPT service.

Training large models such as GPT-4 and Llama 2 has depended on Nvidia GPUs. Google’s Gemini, trained on Google’s own TPUs, is a notable exception. This dependence is one of the keys to Nvidia’s seemingly unassailable position. Over the past year, “grabbing Nvidia GPUs” has been the norm for major technology companies around the world.

The latest report from research firm Omdia gives a set of figures for 2023. Microsoft and Meta each acquired 150,000 Nvidia H100 GPUs, and Google, Amazon, and Oracle bought 50,000 each. Tencent bought 50,000 H800 GPUs. Baidu and Alibaba acquired 30,000 and 25,000 A100 GPUs, respectively.

Nvidia currently holds 82% of the global data center AI accelerator market and as much as 95% of the global AI training market. It also leads in China. IDC research shows that about 1.09 million AI accelerator cards (AI training chips) were shipped in China in 2022, and Nvidia held an 85% share.
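IDC’s shipment total and Nvidia’s share can be combined into an approximate unit count. The sketch below assumes the 85% share applies directly to the roughly 1.09 million units. IDC may actually measure share another way, for example by revenue. The helper `split_by_share` is a hypothetical name used only for illustration. Shares are kept as whole percentages so the arithmetic stays in integers.

```python
# Back-of-the-envelope split of China's 2022 AI accelerator card shipments.
# Both inputs are taken from the article (IDC data); applying the share to
# unit counts is an assumption, since the basis of the share is not stated.
TOTAL_UNITS_2022 = 1_090_000  # approximate shipments in China, 2022
NVIDIA_SHARE_PCT = 85         # Nvidia's share, in whole percent


def split_by_share(total_units: int, share_pct: int) -> tuple[int, int]:
    """Return (units for the named vendor, units for all other vendors)."""
    vendor_units = total_units * share_pct // 100
    return vendor_units, total_units - vendor_units


if __name__ == "__main__":
    nvidia, others = split_by_share(TOTAL_UNITS_2022, NVIDIA_SHARE_PCT)
    print(f"Nvidia: ~{nvidia:,} units; all other vendors: ~{others:,} units")
```

Under that assumption, Nvidia accounted for roughly 926,500 cards and all other vendors together for about 163,500.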

It is fair to say that OpenAI single-handedly ignited the AI revolution. In doing so, it reversed the fortunes of Nvidia, which had been “selling shovels” for many years. 2023 was Nvidia’s year of dominance: its stock price soared 234%, and its market value reached US$1 trillion.

The AI revolution has caused demand for upstream GPUs to explode. By some estimates, peak computing power demand for large-model training worldwide will grow at a compound annual rate of 78% from 2023 to 2027. Measured in A100 equivalents, the total computing power required for global large-model training in 2023 exceeds 2 million A100s.
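A 78% compound annual growth rate adds up quickly. The sketch below prints demand in each year as a multiple of the 2023 level. It assumes the rate holds constant in every year from 2023 to 2027, since the estimate gives only the overall CAGR and not year-by-year figures. The function name `growth_multiple` is illustrative.

```python
# Year-by-year growth multiples implied by a constant compound annual growth
# rate (CAGR). The 78% rate and the 2023-2027 window come from the article;
# applying the same rate in every single year is an assumption.
CAGR = 0.78
START_YEAR = 2023
END_YEAR = 2027


def growth_multiple(rate: float, years: int) -> float:
    """Return how many times larger a quantity is after `years` of compounding."""
    return (1 + rate) ** years


if __name__ == "__main__":
    for year in range(START_YEAR, END_YEAR + 1):
        multiple = growth_multiple(CAGR, year - START_YEAR)
        print(f"{year}: {multiple:.2f}x the {START_YEAR} level")
```

Four years of compounding at 78% gives a multiple of about 10.04. Peak training compute demand in 2027 would therefore be roughly ten times the 2023 level.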

Because of the US export restrictions, the Chinese market may now be Nvidia’s biggest variable. If Nvidia cannot resolve its supply problems there, Huawei may become the biggest potential beneficiary.

Huang Renxun also said publicly on December 6, 2023 that Huawei is one of Nvidia’s “very strong” competitors in the race to produce the “best” AI chips. He stressed that Huawei, Intel, and a growing number of semiconductor startups pose a serious challenge to Nvidia’s dominance of the AI chip market.

Wang Xiaochuan, founder and CEO of Baichuan Intelligence, has said that computing costs in the large model industry fall into two categories: training compute and inference compute. Together they account for more than 40% of the total cost of a large model.

Huawei Ascend and a handful of other companies are among the few domestic chipmakers producing AI training chips. In particular, the Huawei Ascend 910B is benchmarked against Nvidia’s A100. Jiang Tao, board secretary and vice president of iFlytek, has said that the Ascend 910B’s current capabilities are basically on par with the A100.

The good news for challengers is that the cost of training large models is gradually falling, while inference takes an ever larger share of spending at training’s expense. On December 7, 2023, AMD CEO Lisa Su (Su Zifeng) said the global data center AI accelerator market will reach US$400 billion in 2027. More than 50% of that market will come from inference demand.
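AMD’s projection sets a minimum size for the inference market. The sketch below uses the US$400 billion total and treats “more than 50%” as exactly 50%, so it yields a lower bound rather than a point estimate. The function name `inference_floor` is illustrative.

```python
# Lower bound on inference demand within AMD's projected 2027 data center
# AI accelerator market. "More than 50%" is treated as exactly 50% here,
# so the result is a floor, not a point estimate.
MARKET_2027_USD_BN = 400        # projected market size, US$ billions
INFERENCE_SHARE_FLOOR_PCT = 50  # inference share, lower bound in percent


def inference_floor(market_bn: int, share_pct: int) -> tuple[int, int]:
    """Return (minimum inference spend, maximum non-inference spend) in US$ billions."""
    inference_min = market_bn * share_pct // 100
    return inference_min, market_bn - inference_min


if __name__ == "__main__":
    inference_min, other_max = inference_floor(MARKET_2027_USD_BN, INFERENCE_SHARE_FLOOR_PCT)
    print(f"Inference: more than US${inference_min}B; all other uses: less than US${other_max}B")
```

By this reckoning, inference would account for more than US$200 billion of the projected 2027 market. Training and other uses would make up less than US$200 billion.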

Zhou Hongyi, chairman and CEO of 360, has also noticed a trend in Silicon Valley. OpenAI, Microsoft, Meta, Amazon, Qualcomm, and other technology companies are all building inference chips. “Within the next one to two years, large-model inference will no longer need to rely on expensive GPUs. That will allow faster technology iteration and lower computing costs. I personally feel this cost will soon stop being a problem,” he said.

Nvidia’s other moat is CUDA (Compute Unified Device Architecture). Around the CUDA programming platform, Nvidia has built an ecosystem of software, hardware support, and extensions. Nvidia introduced CUDA in 2006 and has been building out this ecosystem ever since.

Huawei’s Ascend 910B is held back by CUDA. Because it is compatible only with older versions of CUDA, downstream customers hesitate, and this delays large-scale adoption.

However, more players are deliberately reducing their reliance on CUDA. Intel CEO Pat Gelsinger recently said publicly that MLIR, Google, and OpenAI are all moving to a “Pythonic programming layer” to make AI training more open.

Precisely because of these many potential risks, Huang Renxun’s sense of crisis is ever-present, even with Nvidia at the top of the GPU field. “We don’t need to pretend that the company is always in danger. We are always in danger,” Huang said in a speech in November 2023.

Roses and thorns go hand in hand. This may remain Nvidia’s situation for a long time to come, and it poses a new test for its helmsman, Huang Renxun.
