Technology To Recognize Human Emotions In Real Time Has Emerged

A domestic research team has developed a technology that can recognize human emotions in real time. The technology is expected to be developed into a device that can be worn on the face and applied in a variety of fields.

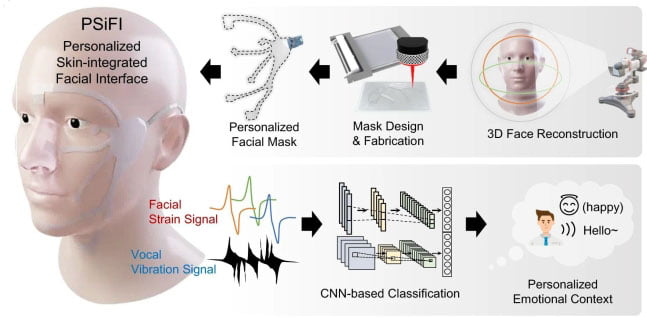

Ulsan National Institute of Science and Technology (UNIST) announced on the 29th that the research team led by Professor Jiyoon Kim of the Department of Materials Science and Engineering has developed the world’s first ‘wearable human emotion recognition technology’ that recognizes human emotions in real time. The system simultaneously detects facial muscle deformation and voice, analyzes them with artificial intelligence, and converts them into emotional information.

The research team simultaneously detected facial muscle deformation and voice, transmitted the multimodal data wirelessly, and extracted emotional information using artificial intelligence (AI). Multimodal refers to exchanging information through multiple channels, such as visual and auditory ones.
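As a rough illustration of what combining two channels can look like, the sketch below joins a facial-strain vector and a vocal-vibration vector into one feature vector and labels it by the nearest class average. This is not the research team's method: the function names, the feature values, and the nearest-centroid classifier are all assumptions made up for this example.

```python
from math import dist

def fuse(strain, vocal):
    """Feature-level fusion: concatenate the two modality vectors."""
    return list(strain) + list(vocal)

def centroids(samples):
    """Average the fused vectors for each emotion label."""
    grouped = {}
    for label, vec in samples:
        grouped.setdefault(label, []).append(vec)
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in grouped.items()
    }

def classify(vec, cents):
    """Return the label whose centroid is closest to the fused vector."""
    return min(cents, key=lambda label: dist(vec, cents[label]))

# Hypothetical toy readings: two facial-strain values and one vocal value.
training = [
    ("happy", fuse([0.9, 0.8], [0.7])),
    ("happy", fuse([0.8, 0.9], [0.6])),
    ("sad", fuse([0.1, 0.2], [0.2])),
    ("sad", fuse([0.2, 0.1], [0.3])),
]
model = centroids(training)
prediction = classify(fuse([0.85, 0.75], [0.65]), model)
print(prediction)
```

A real system would work on time-series sensor signals and a trained neural network rather than three hand-picked numbers, but the idea of merging channels before classification is the same.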

The research team created a wearable device to analyze emotions anytime, anywhere. The system is based on the ‘friction charging’ (triboelectric) phenomenon, in which two objects take on opposite positive and negative charges when they are rubbed together and then separated. The research team explains that because the device generates power on its own, it needs no external power source or complicated measuring equipment to read the data.

The device uses a semi-curing technique that keeps the material in a soft solid state. Using this technique, the team produced a highly transparent conductor and used it as the electrode of a triboelectric device. The team also produced personalized masks using multi-angle imaging.

The system detects facial muscle changes and vocal cord vibrations and integrates the data into emotional information. The research team emphasized that the resulting information could be used to implement a ‘digital concierge’ that provides customized services to users in a digital environment.

The developed system showed high emotion recognition accuracy with little training. In addition, the team not only collected multimodal data but also performed ‘transfer learning’ using the data. The system can, for example, provide a customized service that recommends music, movies, and books by identifying an individual’s emotions in each situation.

Professor Jiyoon Kim said, “In order for people and machines to interact at a high level, they must be able to collect various types of data and handle complex and integrated information.” He added, “This research shows that emotions, a complex form of information, can also be utilized through next-generation wearable systems.”

This research was supported by the National Research Foundation of Korea and the Korea Institute of Materials Science. The research results were published online on the 15th of this month in the international academic journal ‘Nature Communications’.
